Adolf Würth GmbH & Co. KG

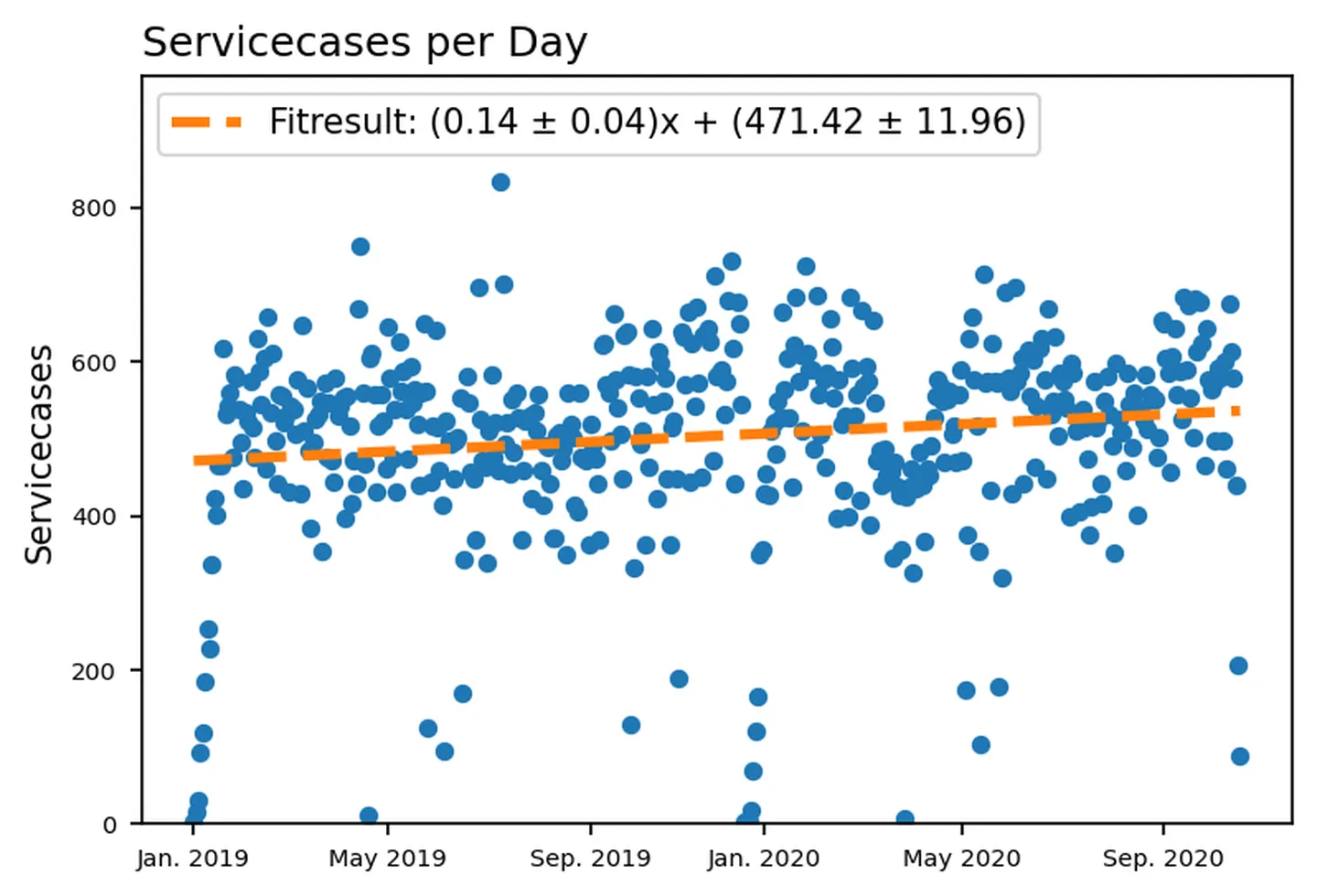

Würth specializes in assembly and fastening materials and has a 75-year company history. With over 400 entities, the world's leading B2B company is represented in 80 countries. In addition to its products, Würth offers planners, configurators, seminars and other services, such as the Würth MASTERSERVICE. As part of MASTERSERVICE, Würth maintains, calibrates and repairs around 500 devices per day. The data generated by these individual service cases is not merely interesting but essential for further optimizing the service.